Securing the workloads running in your Kubernetes cluster is a crucial task when defining an authorization strategy for your setup. You might say it’s Best Practice™ to restrict access both on the network level and with some sort of authn + authz logic.

For the networking part, you can use a VPN solution (WireGuard, OpenVPN) or restrict access via IP whitelisting (Load Balancer / K8s Service / Ingress / NetworkPolicy). The authorization side can be handled by Istio with a custom external authorization system using OIDC. In this guide we use oauth2-proxy for that: it supports a wide range of Identity Providers and is actively maintained. With this setup we won’t need any app code changes at all and can even add auth to tools that don’t support it out of the box (think Prometheus Web UI or your custom micro frontend).

Istio 1.9 introduced delegation of authorization to external systems via the CUSTOM action. This allows us to use the well-known oauth2-proxy component as the authentication system in our mesh. But the CUSTOM action also comes with restrictions:

It currently doesn’t support rules on authentication fields like JWT claims. We don’t need Istio to handle this anyway, as oauth2-proxy itself evaluates whether an allowed group is present in the groups claim.

This way you can leverage oauth2-proxy to authenticate against your Identity Provider (e.g. Keycloak, Google) and even use it for simple authorization logic when evaluating the groups claim in your IdP provisioned JWT.
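When debugging the group check, it helps to see what your IdP actually puts into the groups claim. The following is a minimal shell sketch, assuming you already hold a raw JWT (for example an ID token issued by Keycloak) and have jq installed for pretty-printing; the function name `jwt_payload` is hypothetical. It only decodes the base64url-encoded middle segment of the token and does not verify the signature, so it is a debugging aid, not an authorization check.

```shell
#!/bin/sh
# Hypothetical debugging helper: print the JSON payload of a JWT.
# A JWT is header.payload.signature, each part base64url-encoded without '=' padding.
# NOTE: the signature is NOT verified; never base access decisions on this output.
jwt_payload() {
  # Take the second dot-separated segment and map base64url to standard base64
  payload=$(printf '%s' "$1" | cut -d '.' -f 2 | tr -- '-_' '+/')
  # Restore the '=' padding that base64url strips
  case $(( ${#payload} % 4 )) in
    2) payload="${payload}==" ;;
    3) payload="${payload}=" ;;
  esac
  printf '%s' "$payload" | base64 -d
}

# Usage (assumes $ID_TOKEN holds a raw JWT):
#   jwt_payload "$ID_TOKEN" | jq .groups
```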

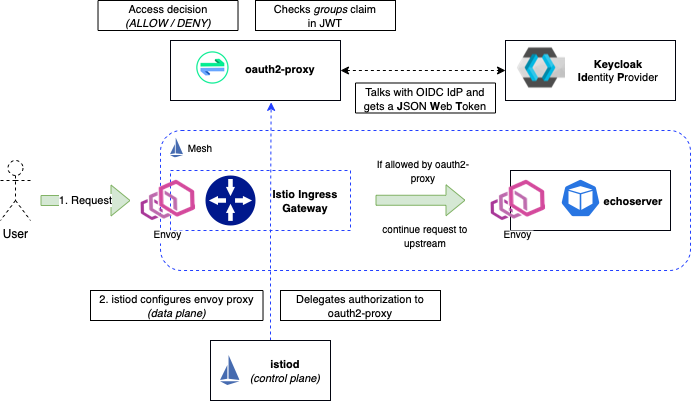

Architecture Diagram

- We have a User sending a request for, let’s say, the Prometheus UI, Grafana, echoserver (which we will use later) or some other component in our mesh.

- The request arrives via the Istio Ingress Gateway

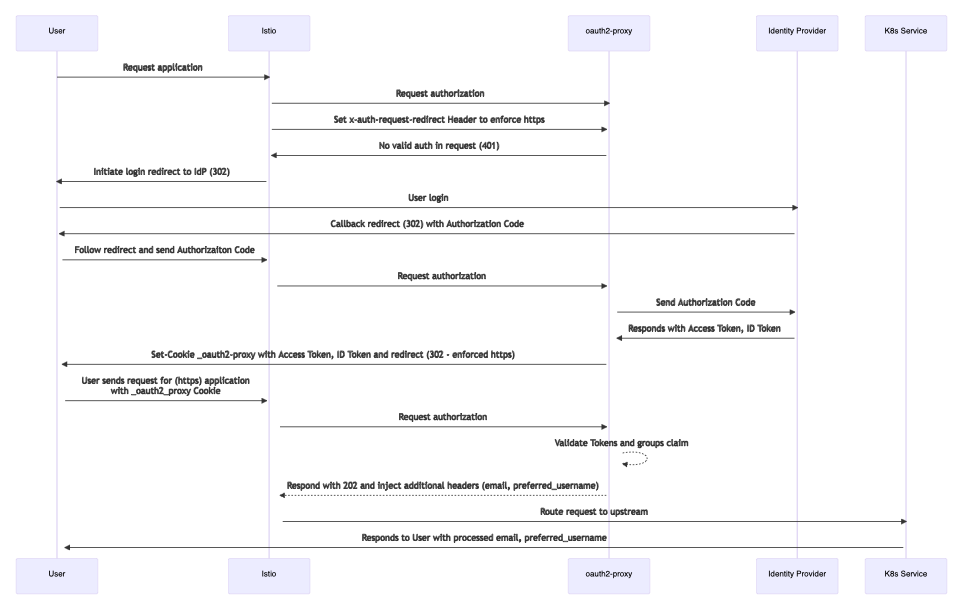

- Our Istio AuthorizationPolicy already configured the Envoy Proxy to delegate authorization to our “external” (from Istio’s view) CUSTOM auth component: oauth2-proxy.

- oauth2-proxy is running in our K8s cluster as well and is configured to talk to our OIDC Identity Provider Keycloak (but you could use other IdPs as well). There is no need for oauth2-proxy to be externally available, as Istio communicates with its K8s Service internally.

- When the User has authenticated successfully with our IdP, oauth2-proxy gets a JWT back and looks into the “groups” claim. This claim is evaluated against the groups we have configured, let’s say “admins”. If the “admins” group is found in the JWT groups claim, oauth2-proxy puts the token into a Cookie and sends it back to the requesting client - our User.

- The User is now forwarded to the actual application. If the application supports it (e.g. Grafana, see the Bonus section), we can configure the application to read the headers we inject with oauth2-proxy. This allows us to set, for example, the `preferred_username` or `email` attributes in the application, information we get from the ID token claims.
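The group evaluation in the flow above is essentially an any-of match: access is granted if at least one group from the token's groups claim is among the configured allowed groups, and no group restriction applies when none are configured. The shell function below only illustrates that logic; it is not oauth2-proxy's code, and `group_allowed` is a hypothetical name.

```shell
#!/bin/sh
# Illustration only (hypothetical helper, not oauth2-proxy code).
# $1: space-separated allowed groups; remaining args: groups from the JWT groups claim.
# Returns 0 (allowed) if any token group is in the allowed list.
group_allowed() {
  allowed=$1
  shift
  # An empty allow list means no group restriction
  [ -z "$allowed" ] && return 0
  for group in "$@"; do
    for candidate in $allowed; do
      [ "$group" = "$candidate" ] && return 0
    done
  done
  return 1
}

# Usage: token groups "developers admins" against allowedGroups [admins, infrateam]
if group_allowed "admins infrateam" developers admins; then
  echo "access granted" # prints: access granted
fi
```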

Okta has written a nice set of articles about OIDC:

Identity, Claims, & Tokens – An OpenID Connect Primer, Part 1 of 3

echoserver

Docker Image 🐳: k8s.gcr.io/e2e-test-images/echoserver:2.5

To test an HTTP endpoint on which we can enable authentication, and to easily view all headers received by an upstream backend server, we will use the echoserver by the Kubernetes team (used in their e2e-test-images).

It’s based on nginx and simply displays all requests sent to it. Alternatively you could use the httpbin image, but I personally prefer the tools created by the Kubernetes project 😊.

It displays some useful information for debugging purposes:

- Pod Name (Name of the echoserver Pod)

- Node (Node where Pod got scheduled)

- Namespace (Pod Namespace)

- Pod IP (Pod IP address)

- Request Information (Client, Method, etc.)

- Request Body (if sent by the client)

- Request Headers (useful for debugging the headers that get sent to the upstream, for example via `headersToUpstreamOnAllow`, which you will see later)

Just deploy the following YAML to your Kubernetes cluster (you will need an existing Istio installation for the VirtualService):

```shell
kubectl apply -f echoserver.yaml
```

The manifest includes these resources:

- Namespace: Where we deploy the echoserver resources

- ServiceAccount: Just so we don’t use the default ServiceAccount. It has no RBAC whatsoever.

- Deployment: We specify three (3) replicas so we can see the K8s load balancing in the displayed Pod information. Additionally we set topologySpreadConstraints so Pods are distributed evenly across zones and nodes.

- The Pod information is displayed (without the need for RBAC) via the Kubernetes Downward API.

- Service: Just a plain simple K8s Service for load balancing

- VirtualService: An Istio VirtualService - update the gateways and hosts according to your setup

echoserver.yaml:

```yaml
apiVersion: v1
kind: Namespace
metadata:
  labels:
    istio-injection: enabled
    kubernetes.io/metadata.name: test-auth
  name: test-auth
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: echoserver
  namespace: test-auth
  labels:
    app: echoserver
automountServiceAccountToken: false
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: echoserver
  namespace: test-auth
  labels:
    app: echoserver
spec:
  replicas: 3
  selector:
    matchLabels:
      app: echoserver
  template:
    metadata:
      labels:
        app: echoserver
    spec:
      serviceAccountName: echoserver
      topologySpreadConstraints:
        - maxSkew: 1
          topologyKey: topology.kubernetes.io/zone
          whenUnsatisfiable: ScheduleAnyway
          labelSelector:
            matchLabels:
              app: echoserver
        - maxSkew: 1
          topologyKey: kubernetes.io/hostname
          whenUnsatisfiable: ScheduleAnyway
          labelSelector:
            matchLabels:
              app: echoserver
      containers:
        - image: k8s.gcr.io/e2e-test-images/echoserver:2.5
          imagePullPolicy: Always
          name: echoserver
          ports:
            - containerPort: 8080
          env:
            # Downward API: expose Pod metadata to the echoserver (no RBAC needed)
            - name: NODE_NAME
              valueFrom:
                fieldRef:
                  fieldPath: spec.nodeName
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
            - name: POD_IP
              valueFrom:
                fieldRef:
                  fieldPath: status.podIP
---
apiVersion: v1
kind: Service
metadata:
  name: echoserver
  namespace: test-auth
  labels:
    app: echoserver
spec:
  ports:
    - port: 80
      targetPort: 8080
      protocol: TCP
  selector:
    app: echoserver
---
apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: echoserver
  namespace: test-auth
  labels:
    app: echoserver
spec:
  gateways:
    - istio-system/istio-ingressgateway
    - mesh # Also apply the routes to in-mesh (sidecar) traffic
  hosts:
    - echo.example.com # Update with your base domain
    - echoserver.test-auth.svc.cluster.local
    - echoserver.test-auth
    - echoserver.test-auth.svc
  http:
    - route:
        - destination:
            host: echoserver.test-auth.svc.cluster.local # Must match the Service above
            port:
              number: 80
```

FYI: Some background info about why you should prefer topologySpreadConstraints over podAntiAffinity can be found here: https://github.com/kubernetes/kubernetes/issues/72479

oauth2-proxy

Helm Chart

Helm Chart: https://github.com/oauth2-proxy/manifests/tree/main/helm/oauth2-proxy

Helm Repo: https://oauth2-proxy.github.io/manifests

Deploying oauth2-proxy is straightforward with the official Helm Chart.

oauth2-proxy is currently transitioning its configuration options and introduced the alphaConfig in v7.0.0. So you end up with three ways of configuring oauth2-proxy:

- extraArgs: additional arguments passed to the oauth2-proxy command

- config: the “old” way of configuration

- alphaConfig: the new way of configuration mentioned before; it may still change in the future and introduce breaking changes

As we are using version 7.3.0, the most recent at the time of writing, we need to mix all three, as not every parameter is supported by every configuration method yet.

Session Storage

In oauth2-proxy you have two different session storage options available:

- Cookie (the default)
- Redis

As the latter requires Redis running in your cluster, we will go the stateless route here and use cookie-based storage. But be aware that cookie-based storage means more traffic over the wire, as the Cookie with the auth data has to be sent by the client with every request.

The official docs show how to generate a valid, secure Cookie Secret with Bash, OpenSSL, PowerShell, Python or Terraform (https://oauth2-proxy.github.io/oauth2-proxy/docs/configuration/overview). We will use bash here:

```shell
dd if=/dev/urandom bs=32 count=1 2>/dev/null | base64 | tr -d -- '\n' | tr -- '+/' '-_'; echo
```

Save this secret as we will use it for the Helm Chart value .config.cookieSecret.
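oauth2-proxy uses the cookie secret to build an AES cipher, so it has to be 16, 24 or 32 bytes long; the secret may optionally be base64 encoded. The following sketch wraps the command above in a function and checks the decoded length before the value goes into the Helm values. It assumes a `base64` that supports `-d` (GNU coreutils, BusyBox or recent macOS), and both function names are hypothetical.

```shell
#!/bin/sh
# Hypothetical helpers around the cookie secret command shown above.
gen_cookie_secret() {
  # 32 random bytes, base64 encoded, converted to the URL-safe alphabet
  dd if=/dev/urandom bs=32 count=1 2>/dev/null | base64 | tr -d -- '\n' | tr -- '+/' '-_'
}

cookie_secret_bytes() {
  # Map back to the standard alphabet, decode and count the raw bytes
  printf '%s' "$1" | tr -- '-_' '+/' | base64 -d | wc -c | tr -d ' '
}

COOKIE_SECRET=$(gen_cookie_secret)
# AES accepts 16, 24 or 32 byte keys; the command above yields 32
case $(cookie_secret_bytes "$COOKIE_SECRET") in
  16|24|32) echo "cookie secret OK" ;;
  *) echo "cookie secret has an invalid length" >&2 ;;
esac
```

32 random bytes encode to a 44-character string (including one `=` of padding), which is what you paste into `config.cookieSecret`.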

Provider

Depending on your identity provider/s (soon you can use multiple ones!) the provider config will vary. Here I show you an example for Keycloak as our Identity Provider - but you can use any OAuth provider supported by oauth2-proxy.

We use neither the deprecated `keycloak` nor the newer `keycloak-oidc` provider here. Instead we use the generic OpenID Connect (`oidc`) provider to showcase what a general provider config in oauth2-proxy looks like.

You can GET the OIDC URLs for the provider config from your IdP’s well-known endpoint. For Keycloak it should look something like this:

```shell
curl -s "https://<keycloak-domain>/identity/auth/realms/<keycloak-realm>/.well-known/openid-configuration" | jq .
```

This retrieves all endpoints along with other information. We are only interested in a few of them and will save them to shell variables:

```shell
OIDC_DISCOVERY_URL="https://<keycloak-domain>/identity/auth/realms/<keycloak-realm>/.well-known/openid-configuration"
OIDC_DISCOVERY_URL_RESPONSE=$(curl -s "$OIDC_DISCOVERY_URL")

# Extract the endpoints we need for the oauth2-proxy provider config
OIDC_ISSUER_URL=$(echo "$OIDC_DISCOVERY_URL_RESPONSE" | jq -r .issuer)
OIDC_JWKS_URL=$(echo "$OIDC_DISCOVERY_URL_RESPONSE" | jq -r .jwks_uri)
OIDC_AUTH_URL=$(echo "$OIDC_DISCOVERY_URL_RESPONSE" | jq -r .authorization_endpoint)
OIDC_REDEEM_URL=$(echo "$OIDC_DISCOVERY_URL_RESPONSE" | jq -r .token_endpoint)
OIDC_PROFILE_URL=$(echo "$OIDC_DISCOVERY_URL_RESPONSE" | jq -r .userinfo_endpoint)
```
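To reduce copy-paste errors, the extracted endpoints can be printed in the shape of the provider entry in the values file. This is a sketch under the assumption that the variables from the previous step are set in the current shell; `render_provider_urls` is a hypothetical helper, and `validateURL` reuses the userinfo endpoint just like the values file does. It stops early if a variable is empty or `jq -r` printed `null` for a missing key.

```shell
#!/bin/sh
# Hypothetical helper: print the discovered OIDC endpoints as the URL fields of an
# oauth2-proxy alphaConfig provider entry (indentation matches values-oauth2-proxy.yaml).
render_provider_urls() {
  for value in "$OIDC_AUTH_URL" "$OIDC_REDEEM_URL" "$OIDC_PROFILE_URL" \
               "$OIDC_ISSUER_URL" "$OIDC_JWKS_URL"; do
    # jq -r prints "null" when a key is missing from the discovery document
    if [ -z "$value" ] || [ "$value" = "null" ]; then
      echo "missing OIDC endpoint, check the discovery response" >&2
      return 1
    fi
  done
  cat <<EOF
      loginURL: ${OIDC_AUTH_URL}
      redeemURL: ${OIDC_REDEEM_URL}
      profileURL: ${OIDC_PROFILE_URL}
      validateURL: ${OIDC_PROFILE_URL}
      oidcConfig:
        issuerURL: ${OIDC_ISSUER_URL}
        jwksURL: ${OIDC_JWKS_URL}
EOF
}

# Usage, after running the discovery commands above:
#   render_provider_urls
```

Because the values file sets `skipDiscovery: true`, oauth2-proxy relies on exactly these URLs, so checking them once here saves a failed rollout later.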

Now we configure the Helm values we will use for the deployment of oauth2-proxy. For Keycloak as our IdP our minimal configuration would look like the following YAML.

values-oauth2-proxy.yaml:

```yaml
config:
  cookieSecret: "XXXXXXXXXXXXXXXX" # https://oauth2-proxy.github.io/oauth2-proxy/docs/configuration/overview/#generating-a-cookie-secret
  # cookieName: "_oauth2_proxy" # Name of the cookie that oauth2-proxy creates, if not set defaults to "_oauth2_proxy"
  configFile: |-
    email_domains = [ "*" ] # Restrict to these E-Mail Domains, a wildcard "*" allows any email

alphaConfig:
  enabled: true
  providers:
    - clientID: # IdP Client ID
      clientSecret: # IdP Client Secret
      id: oidc-istio
      provider: oidc # We use the generic 'oidc' provider
      loginURL: https://<keycloak-domain>/identity/auth/realms/<keycloak-realm>/protocol/openid-connect/auth
      redeemURL: https://<keycloak-domain>/identity/auth/realms/<keycloak-realm>/protocol/openid-connect/token
      profileURL: https://<keycloak-domain>/identity/auth/realms/<keycloak-realm>/protocol/openid-connect/userinfo
      validateURL: https://<keycloak-domain>/identity/auth/realms/<keycloak-realm>/protocol/openid-connect/userinfo
      scope: "openid email profile groups"
      allowedGroups:
        - admins # List all groups managed at your IdP which should be allowed access
        # - infrateam
        # - anothergroup
      oidcConfig:
        emailClaim: email # Name of the claim in JWT containing the E-Mail
        groupsClaim: groups # Name of the claim in JWT containing the Groups
        userIDClaim: email # Name of the claim in JWT containing the User ID
        skipDiscovery: true # You can try using the well-known endpoint directly for auto discovery, here we won't use it
        issuerURL: https://<keycloak-domain>/identity/auth/realms/<keycloak-realm>
        jwksURL: https://<keycloak-domain>/identity/auth/realms/<keycloak-realm>/protocol/openid-connect/certs
  upstreamConfig:
    upstreams:
      - id: static_200
        path: /
        static: true
        staticCode: 200
  # Headers that should be added to responses from the proxy
  injectResponseHeaders: # Send these headers in responses from oauth2-proxy
    - name: X-Auth-Request-Preferred-Username
      values:
        - claim: preferred_username
    - name: X-Auth-Request-Email
      values:
        - claim: email

extraArgs:
  cookie-secure: "false"
  cookie-domain: ".example.com" # Replace with your base domain
  cookie-samesite: lax
  cookie-expire: 12h # How long our Cookie is valid
  auth-logging: true # Enable / Disable auth logs
  request-logging: true # Enable / Disable request logs
  standard-logging: true # Enable / Disable the standard logs
  show-debug-on-error: true # Disable in production setups
  skip-provider-button: true # We only have one provider configured (Keycloak)
  silence-ping-logging: true # Keeps our logs clean
  whitelist-domain: ".example.com" # Replace with your base domain
```

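Add the oauth2-proxy Helm repo and install the Helm Chart with our custom values file. The release name and namespace (both `oauth2-proxy`) match the service host name `oauth2-proxy.oauth2-proxy.svc.cluster.local` that we configure in Istio:

```shell
helm repo add oauth2-proxy https://oauth2-proxy.github.io/manifests
helm repo update
helm upgrade --install oauth2-proxy oauth2-proxy/oauth2-proxy --version 6.2.2 \
  --namespace oauth2-proxy --create-namespace \
  -f values-oauth2-proxy.yaml
```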

ℹ️ What happens if oauth2-proxy is down / not available? You will receive a blank `RBAC: access denied` response from Istio.

Istio

Helm Chart

Helm Charts: https://github.com/istio/istio/tree/master/manifests/charts

Helm Repo: https://istio-release.storage.googleapis.com/charts

The Istio installation itself won’t be covered here, only the configuration bits we need for our setup.

We need to tell Istio to use oauth2-proxy as extensionProvider in its meshConfig and set the required headers. Some important configuration options are explained here:

| Name | Description | Headers |
|---|---|---|
| port | Port number of our oauth2-proxy K8s Service | |
| service | Fully qualified host name of the authorization service (oauth2-proxy K8s Service) | |
| headersToUpstreamOnAllow | Send these headers to the upstream when auth is successful | path, x-auth-request-email, x-auth-request-preferred-username |
| headersToDownstreamOnDeny | Send these headers back to the client (downstream) when auth is denied | content-type, set-cookie |
| includeRequestHeadersInCheck | Send these headers from the original request to oauth2-proxy | authorization, cookie, x-auth-request-groups |
| includeAdditionalHeadersInCheck | Add these headers when the auth request is sent to oauth2-proxy | x-auth-request-redirect |

Headers starting with x-auth-request-* are nginx auth_request compatible headers.

| Header | Description |
|---|---|
| path | Path Header |
| x-auth-request-email | E-Mail of the user (email claim) |
| x-auth-request-preferred-username | Username of the user (preferred_username claim). This header can be configured with e.g. Grafana to display the username (if supported). |
| x-auth-request-groups | Where we store the Groups of this user (from the JWT's groups claim) |
| cookie | We are using Cookie based storage, so we need this request header during the check of the request |
| set-cookie | Allow setting the set-cookie header when denying the request initially |
| content-type | Allow setting the Content Type when denied |
| authorization | ID Token |
| x-auth-request-redirect | oauth2-proxy header with the URL to redirect the client to after authentication (example: enforce https but keep authority and path from the request) |

Update Istio config

values-istiod.yaml:

```yaml
meshConfig:
  extensionProviders:
    - name: oauth2-proxy
      envoyExtAuthzHttp:
        service: oauth2-proxy.oauth2-proxy.svc.cluster.local
        port: 80
        headersToUpstreamOnAllow:
          - path
          - x-auth-request-email
          - x-auth-request-preferred-username
        headersToDownstreamOnDeny:
          - content-type
          - set-cookie
        includeRequestHeadersInCheck:
          - authorization
          - cookie
          - x-auth-request-groups
        includeAdditionalHeadersInCheck: # Optional for oauth2-proxy to enforce https
          X-Auth-Request-Redirect: 'https://%REQ(:authority)%%REQ(:path)%'
```

Deploy these Helm values together with the istio/istiod Helm Chart like this (you will need the other components too, see the Istio Helm install docs for more information):

```shell
helm upgrade --install istiod istio/istiod -n istio-system --wait -f values-istiod.yaml
```

With these changes deployed, we still do not have any authentication when we visit our echoserver endpoint. This is done with the next resource: an AuthorizationPolicy.

The following policy enables CUSTOM authentication via oauth2-proxy on our istio-ingress-gateway for the echoserver endpoint - just apply it with kubectl:

```shell
kubectl apply -f authorizationpolicy.yaml
```

authorizationpolicy.yaml:

```yaml
apiVersion: security.istio.io/v1beta1
kind: AuthorizationPolicy
metadata:
  name: ext-authz
  namespace: istio-system
spec:
  selector:
    matchLabels:
      istio: ingress-gateway # adapt to your needs
  action: CUSTOM
  provider:
    name: oauth2-proxy
  rules:
    - to:
        - operation:
            hosts:
              - echo.example.com
              # - another.test.com
              # List here all your endpoints where you wish to enable auth
```

We enable authentication on the Host part of the request, but you could enable it on Path or Method, too. See the Istio Docs for more info and options.

🚩 Production Note

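In a production environment, you might want to set a default deny-all AuthorizationPolicy, because Istio allows all traffic to a workload when no AuthorizationPolicy applies to it. Policies are evaluated in the order CUSTOM, DENY, ALLOW. A policy with an empty spec in the root namespace (istio-system by default) acts as a mesh-wide allow-nothing policy, so you then need explicit ALLOW policies for all traffic that should still pass, including the traffic to oauth2-proxy.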

authz-deny-all.yaml:

```yaml
apiVersion: security.istio.io/v1beta1
kind: AuthorizationPolicy
metadata:
  name: deny-all
  namespace: istio-system
spec: {}
```

Test

Request the echoserver deployment in your browser of choice and you should be forwarded to your Identity Provider and presented with a login. If the login is successful and the “admins” group is listed in your JWT groups claim, you will be forwarded to the echoserver, which replays all the headers it received and displays them in your browser.

Bonus

Configure Grafana to use the preferred_username and email information from the headers sent to it.

In the grafana.ini values of the grafana/grafana Helm Chart, just enable and configure the Grafana auth proxy:

```yaml
grafana.ini:
  "auth.proxy":
    enabled: true
    header_name: x-auth-request-preferred-username
    header_property: username
    headers: "Email:x-auth-request-email"
```

Links

- Istio: External Authorization

- Welcome to OAuth2 Proxy | OAuth2 Proxy

- https://events.istio.io/istiocon-2021/slides/d8p-DeepDiveAuthPolicies-LawrenceGadban.pdf

- Alpha Configuration | OAuth2 Proxy

- OpenId Connect Scopes

- SaaS Identity and Routing with Istio Service Mesh and Amazon EKS | Amazon Web Services

- Istio OIDC Authentication | Jetstack Blog

- Istio and OAuth2-Proxy in Kubernetes for microservice authentication

- Alternative to oauth2-proxy: https://github.com/istio-ecosystem/authservice

- Alternative to oauth2-proxy: http://openpolicyagent.org